Continuous Delivery & GitOps FAQs

This article addresses some frequently asked questions about Harness Continuous Delivery & GitOps.

For an overview of Harness' support for platforms, methodologies, and related technologies, go to Supported platforms and technologies.

For a list of CD supported platforms and tools, go to CD integrations.

For an overview of Harness concepts, see Learn Harness' key concepts.

General FAQs

How does Harness calculate pricing for CD?

See Service-based licensing and usage for CD

My definition of a service differs from the above standard definition. How will pricing work in my case?

Harness allows deployment of various custom technologies such as Terraform scripts, background jobs, and other non-specified deployments. These require custom evaluation to assess the correct Licensing model. Please contact the Harness Sales team to discuss your specific technologies and deployment use cases.

See the Pricing FAQ at Harness pricing.

Are there other mechanisms to license Harness CD beyond services?

See the Pricing FAQ at Harness pricing.

Yes, we are happy to have Harness Sales team work with you and understand the specifics of what you are trying to achieve and propose a custom licensing/pricing structure.

Do unused/stale services consume a license?

See the Pricing FAQ at Harness pricing.

Harness CD actively tracks and provides visibility into all active services that consume a license.

An active service is defined as a service that has been deployed at least once in the last 30 days. A service deemed inactive (no deployments in the last 30 days), does not consume a license.

How will I know if I am exceeding my licensed service usage?

See the Pricing FAQ at Harness pricing.

Harness CD has built-in license tracking and management dashboards that provide you real-time visibility into your license allocation and usage.

If you notice that you are nearing or exceeding your licensed services, please get in touch with Harness Sales team to plan ahead and ensure continued usage and compliance of the product.

How many users can I onboard onto Harness CD? Is there a separate pricing for Users?

Harness CD has been designed to empower your entire Engineering and DevOps organization to deploy software with agility and reliability. We do not charge for users who onboard Harness CD and manage various aspects of the deployment process, including looking through deployment summaries, reports, and dashboards. We empower users with control and visibility while pricing only for the actual ‘services’ you deploy as a team.

If I procure a certain number of service licenses on an annual contract, and realize that more licenses need to be added, am I able to procure more licenses mid-year through my current contract?

See the Pricing FAQ at Harness pricing.

Yes, Harness Sales team is happy to work with you and help fulfill any Harness-related needs, including mid-year plan upgrades and expansions.

If I procure a certain number of service licenses on an annual contract, and realize that I may no longer need as many, am I able to reduce my licenses mid-year through my current contract?

See the Pricing FAQ at Harness pricing.

While an annual contract can not be lowered mid-year through the contract, please contact us and we will be very happy to work with you. In case you are uncertain at the beginning of the contract of how many licenses should be procured - you can buy what you use today to start and expand mid-year as you use more. You can also start with a monthly contract and convert to an annual subscription.

What if I am building an open source project?

We love Open Source and are committed to supporting our Community. We recommend the open-source Gitness for hosting your source code repository as well as CI/CD pipelines.

Contact us and we will be happy to provide you with a no restriction SaaS Plan!

What if I add more service instance infrastructure outside of Harness?

See the Pricing FAQ at Harness pricing.

If you increase the Harness-deployed service instance infrastructure outside of Harness, Harness considers this increase part of the service instance infrastructure and licensing is applied.

When is a service instance removed?

If Harness cannot find the service instance infrastructure it deployed, it removes it from the Services dashboard.

If Harness cannot connect to the service instance infrastructure, it will retry until it determines if the service instance infrastructure is still there.

If the instance/pod is in a failed state does it still count towards the service instance count?

Harness performs a steady state check during deployment and requires that each instance/pod reach a healthy state.

A Kubernetes liveness probe failing later would mean the pod is restarted. Harness continues to count it as a service instance.

A Kubernetes readiness probe failing later would mean traffic is no longer routed to the pods. Harness continues to count pods in that state.

Harness does not count an instance/pod if it no longer exists. For example, if the replica count is reduced.

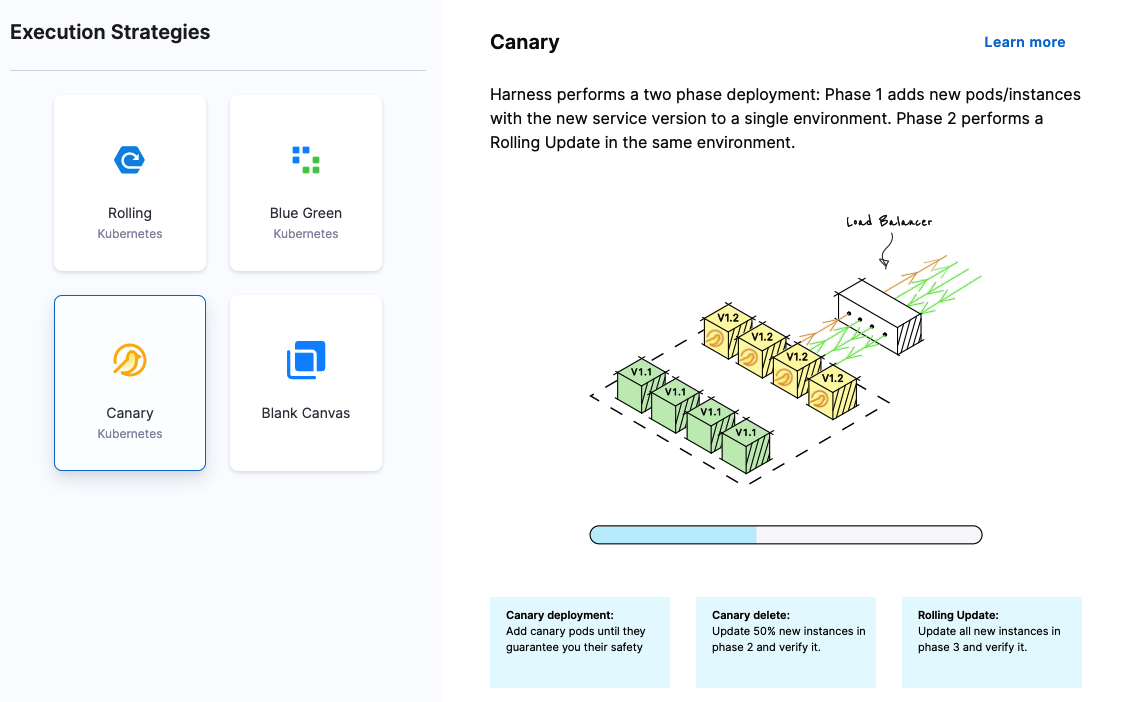

What deployment strategies can I use?

Harness supports all deployment strategies, such as blue/green, rolling, and canary.

See Deployment concepts and strategies

How do I filter deployments on the Deployments page?

You can filter deployments on the the Deployments page according to multiple criteria, and save these filters as a quick way to filter deployments in the future.

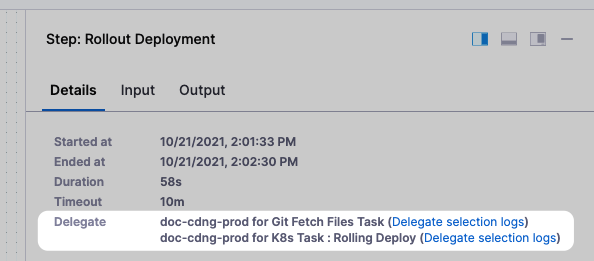

How do I know which Harness Delegates were used in a deployment?

Harness displays which Delegates performed each task in the Details of each step.

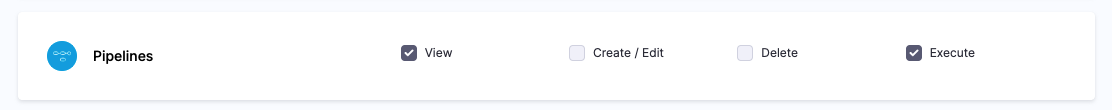

Can I restrict deployments to specific User Groups?

Yes, you can enable the Role permission Pipeline Execute and then apply that Role to specific User Groups.

See Manage roles.

Can I deploy a service to multiple infrastructures at the same time?

Each stage has a service and target Infrastructure. If your Pipeline has multiple stages, you can deploy the same service to multiple infrastructures.

See Define your Kubernetes target infrastructure.

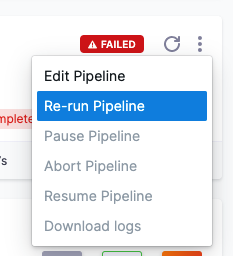

Can I re-run a failed deployment?

Yes, select Re-run Pipeline.

How to handle the scenario where powershell scripts does not correctly return the status code on failure ?

Though it is an issue with Powershell where it does not return the error code correctly we need this for our step to proceed further and reflect the status correctly. Consider wrapping the code like below in the script:

$ErrorActionPreference = [System.Management.Automation.ActionPreference]::Stop

<execution code>

exit $LASTEXITCODE

Can we persist variables in the pipeline after the pipeline run is completed ?

We do not persist the variables and the variables are only accessible during the context of execution. You can make api call to write it as harness config file and later access the Harness file or alternatively you have a config file in git where you can push the var using a shell script and later access the same config file.

How do I access one pipeline variables from another pipeline ?

Directly, it may not be possible.

As a workaround, A project or org or account level variable can be created and A shell script can be added in the P1 pipeline after the deployment which can update this variable with the deployment stage status i.e success or failure then the P2 pipeline can access this variable and perform the task based on its value.

The shell script will use this API to update the value of the variable - https://apidocs.harness.io/tag/Variables#operation/updateVariable

Why some data for the resource configurations returned by api are json but not the get pipeline detail api ?

The reason the get api call for pipeline is returning a yaml because the pipeline is stored as yaml in harness. As this api call is for fetching the pipeline hence it is returning the yaml definition of the pipeline and not the json. If still you need json representation of the output you can use a parser like yq to convert the response.

How to exit a workflow without marking it as failed

You can add a failure strategy in the deploy stage by either ignoring the failure for the shell script or getting a manual intervention where you can mark that step as a success.

2 Deployments in pipeline, is it possible for me to rollback the stage 1 deployment if the stage 2 tests returned errors?

We do have a pipeline rollback feature that is behind a feature flag. This might work better as you would be able to have both stages separate, with different steps, as you did before, but a failure in the test job stage could roll back both stages.

Also, for the kubernetes job, if you use the Apply step instead of Rollout then the step will wait for the job to complete before proceeding, and you would not need the Wait step.

CDNG Notifications custom slack notifications

It is possible to create a shell script that sends notifications through Slack, in this case, we can refer to this article:

https://discuss.harness.io/t/custom-slack-notifications-using-shell-script/749

Creation of environment via API?

We do support API's for the nextgen : https://apidocs.harness.io/tag/Environments#operation/createEnvironmentV2

curl -i -X POST \

'https://app.harness.io/ng/api/environmentsV2?accountIdentifier=string' \

-H 'Content-Type: application/json' \

-H 'x-api-key: YOUR_API_KEY_HERE' \

-d '{

"orgIdentifier": "string",

"projectIdentifier": "string",

"identifier": "string",

"tags": {

"property1": "string",

"property2": "string"

},

"name": "string",

"description": "string",

"color": "string",

"type": "PreProduction",

"yaml": "string"

}'

Download artifact for winrm is not working while Nexus if windows machine is behind proxy in CG

Nexus is supported for NG but not in CG, so you can use custom powershell script something like below: Invoke-WebRequest -Uri "${URI}" -Headers $Headers -OutFile "${OUT_FILE}" -Proxy "$env:HTTP_PROXY"

How can we automatically create a new service whenever a new service yaml is uploaded to my source repo?

We can create a pipeline with api call for service creation and in that pipeline we can add a trigger to our source repo where service yaml is uploaded. Now whenever there will be a new service yaml the pipeline will get triggered and we can fetch this new service yaml using git cli in the shell step and use the yaml to make the api call for service creation.

How do I use all environments and only select infrastructure for multiple environment deployments?

Use filtered lists for this purpose. You can specify "Filter on Entities" as Environment in the first filter and select "Type" as all. Now for the infrastructure you can add another filter and provide the tag filter.

How do I list Github Tags for custom artifact when the curl returns a json array without any root element?

We cannot provide an array directly to the custom artifact. It needs a root element to parse the json response.

How to use the Stage Variable inside the Shell Script?

A variable expression can be used to access stage variables in pipelines. Just hover over your variable name, and you will see an option to copy the variable expression path, You can reference this path in shell script.

How can we return dynamically generated information to a calling application upon the successful completion of pipelines initiated by API calls from other applications?

You can configure pipeline outputs throughout the stages to include all the data you want to compile. Then, upon execution completion, you can include a shell script that references these outputs and sends the compiled information to the desired API.

Can we get details what branch did trigger the pipeline and who did it; the time the pipeline failed or terminated, while using Microsoft Teams Notification

These details are not available by default as only (status, time, pipeline name url etc) is only sent and if you need these details might ned to use custom shell script

How to pass list of multiple domains for allowing whitelisting while using api ?

Domain whitelisting api takes domain as input array. So if we have multiple domains to be passed this needs to be done as coma separeted string entries in the array. Below is a sample for the same:

curl -i -X PUT \

'https://app.harness.io/ng/api/authentication-settings/whitelisted-domains?accountIdentifier=xxxx' \

-H 'Content-Type: application/json' \

-H 'x-api-key: REDACTED' \

-d '["gmail.com","harness.io"]'

I have a pipeline containing different stages DEV-QA-UAT-PROD. In UAT I'm using Canary deployment and in PROD it's Blue-Green. In these scenarios how Harness provides proper Roll Back strategies?

Harness provides a declarative rollback feature that can perform rollbacks effectively in different deployment scenarios.

For Canary deployment in UAT, you can define the percentage of traffic to route to the new version and set up conditions to switch traffic between the old and new versions. If an anomaly is detected during the canary deployment, Harness will automatically trigger a rollback to the previous version.

For Blue-Green deployment in PROD, you can define the conditions to switch traffic between the blue and green environments. If an issue is detected in the green environment, you can easily switch back to the blue environment using the declarative rollback feature.

You can define the failure strategy on stages and steps in your pipeline to set up proper rollback strategies. You can add a failure strategy in the deploy stage by either ignoring the failure for the shell script or getting a manual intervention where you can mark that step as a success. Additionally, you can use the declarative rollback feature provided by Harness to perform rollbacks effectively in different deployment scenarios.

How to pass the dynamic tag of the image from the CI pipeline to the CD Pipeline to pull the image.

A project or org or account level variable can be created and A shell_script/Run Step can be added in the P1 pipeline to export or output the required variable then the P2 pipeline can access this variable and perform the task based on its value.

The shell script will use this API to update the value of the variable.

Where can one find the API request and response demo for execution of Pipeline with Input Set ?

One can use the below curl example to do so :

curl -i -X POST \

'https://app.harness.io/pipeline/api/pipeline/execute/{identifier}/inputSetList?accountIdentifier=string&orgIdentifier=string&projectIdentifier=string&moduleType=string&branch=string&repoIdentifier=string&getDefaultFromOtherRepo=true&useFQNIfError=false¬esForPipelineExecution=' \

-H 'Content-Type: application/json' \

-H 'x-api-key: YOUR_API_KEY_HERE' \

-d '{

"inputSetReferences": [

"string"

],

"withMergedPipelineYaml": true,

"stageIdentifiers": [

"string"

],

"lastYamlToMerge": "string"

}'

Please read more on this in the following documentation on Execute a Pipeline with Input Set References.

How do we pass the output list of first step to next step looping strategy "repeat", the output can be a list or array which needs to be parsed ?

The Output Variable of the shell script is a string, which you are trying to pass as a list of strings, to avoid this :

- First you need to convert your array list into a string and then pass it as an output variable.

- Then convert this string into a list of string again before passing it to the repeat strategy.

Please read more on this in the following Documentation.

I need to run my step in delegate host?

You can create a shell script and select option as execute on delegate under Execution Target

How to fetch files from the harness file store in the run step?

To fetch files from the Harness file store in a Run step, you can use the following example:

- step:

type: Run

name: Fetch Files from File Store

identifier: fetch_files

spec:

shell: Sh

command: |

harness file-store download-file --file-name <file_name> --destination <destination_path>

Replace "filename" with the name of the file you want to fetch from the file store, and "destinationpath" with the path where you want to save the file on the target host.

Does Harness supports multiple IaC provisioners?

Harness does support multiple Iac provisioners, few examples are terraform, terragrunt, cloud formation, shell script provisioning etc.

How do I setup a Pipeline Trigger for Tag and Branch creation in Github?

The out of the box Github Trigger type does not currently support this however, you can use a Custom Webhook trigger and follow the below steps in order to achieve this.

- Create a Custom Webhook trigger

- Copy the Webhook URL of the created trigger

- Configure a Github Repository Webhook pasting in the URL copied from Step 2 in the Payload URL

- Set the content type to

application/json - Select

Let me select individual events.for theWhich events would you like to trigger this webhook?section - Check the

Branch or tag creationcheckbox

What are reserved symbols in PowerShell, and how do I handle them in Harness secrets in Powershell scripts?

Symbols such as |, ^, &, <, >, and % are reserved in PowerShell and can have special meanings. It's important to be aware of these symbols, especially when using them as values in Harness secrets.

If a reserved symbol needs to be used as a value in a Harness secret for PowerShell scripts, it should be escaped using the ^ symbol. This ensures that PowerShell interprets the symbol correctly and does not apply any special meanings to it.

The recommended expression to reference a Harness secret is <+secrets.getValue('secretID')>. This ensures that the secret value is obtained securely and without any issues, especially when dealing with reserved symbols.

Which API is utilized for modifying configuration in the update-git-metadata API request for pipelines?

Please find an example API call below:

curl --location --request PUT 'https://app.harness.io/gateway/pipeline/api/pipelines/<PIPELINE_IDENTIFIER>/update-git-metadata?accountIdentifier=<ACCOUNT_ID>&orgIdentifier=<ORG_ID>&projectIdentifier=<PROJECT_IDENTIFIER>&connectorRef=<CONNECTOR_REF_TO_UPDATE>&repoName=<REPO_NAME_TO_UPDATE>&filePath=<FILE_PATH_TO_UPDATE>' \

-H 'x-api-key: <API_KEY>' \

-H 'content-type: application/json' \

Please read more on this in the following Documentation

How do I perform iisreset on a Windows machine?

You can create a WinRM connector and use a powershell script to perform the iisreset. Make sure the user credentials used for the connection have admin access.

If the assigned delegate executing a task goes down does the task gets re-assigned to other available delegates?

If a delegate fails or disconnects, then the assigned task will fail. We do not perform the re-assignment. If the step is idempotent then we can use a retry strategy to re-execute the task.

If the "All environments" option is used for a multiple environment deployment, why can we not specify infrastructure?

When the "All environments" option is selected we do not provide infrastructure selection in the pipeline editor. The infrastructure options are available in the run form.

We have an updated manifest file for deployment, but delegate seems to be fetching old manifest. How can we update this?

You can clear the local cached repo.

Local repository is stored at /opt/harness-delegate/repository/gitFileDownloads/Nar6SP83SJudAjNQAuPJWg/<connector-id>/<repo-name>/<sha1-hash-of-repo-url>.

Can we get the pipeline execution url from the custom trigger api response?

The custom trigger api response contains a generic url for pipeline execution and not the exact pipeline execution. If we need the exact pipeline execution for any specific trigger we need to use the trigger activity page.

Does Harness offer a replay feature similar to Jenkins?

Yes, Harness provides a feature similar to Jenkins' Replay option, allowing you to rerun a specific build or job with the same parameters and settings as the previous execution. In Harness, this functionality is known as Retry Failed Executions. You can resume pipeline deployments from any stage or from a specific stage within the pipeline.

To learn more about how to utilize this feature in Harness, go to Resume pipeline deployments documentation.

How can I handle uppercase environment identifiers in Harness variables and deploy pipelines?

Harness variables provide flexibility in managing environment identifiers, but dealing with uppercase identifiers like UAT and DR can pose challenges. One common requirement is converting these identifiers to lowercase for consistency. Here's how you can address this:

-

Using Ternary Operator: While if-else statements aren't directly supported in variables, you can leverage the ternary operator to achieve conditional logic.

-

Updating Environment Setup: Another approach is to update your environment setup to ensure identifiers like UAT and DR are stored in lowercase. By maintaining consistency in the environment setup, you can avoid issues with case sensitivity in your deployment pipelines.

What does "buffer already closed for writing" mean?

This error occurs in SSH or WinRM connections when some command is still executing and the connection is closed by the host. It needs further debugging by looking into logs and server resource constraints.

Where do I get the metadata for the Harness download/copy command?

This metadata is detected in the service used for the deployment. Ideally, you would have already configured an artifact, and the command would use the same config to get the metadata.

Can I use SSH to copy an artifact to a target Windows host?

If your deployment type is WinRM, then WinRM is the default option used to connect to the Windows host.

Why doesn't the pipeline skip steps in a step group when another step in the group fails?

If you want this to occur, you neeed to define a conditional execution of <+stage.liveStatus> == "SUCCESS" on each step in the group.

Why am I getting an error that the input set does not exist in the selected Branch?

This happens because pipelines and input sets need to exist in the same branch when storing them in Git. For example, if your pipeline exists in the dev branch but your input set exists in the main branch, then loading the pipeline in the dev branch and attempting to load the input set will cause this error. To fix this, please ensure that both the pipeline and input set exist in the same branch and same repository.

When attempting to import a .yaml file from GitHub to create a new pipeline, the message This file is already in use by another pipeline is displayed. Given that there are no other pipelines in this project, is there a possibility of a duplicate entry that I may not be aware of?

It's possible that there are two pipeline entities in the database, each linked to the same file path from the Harness account and the GitHub URL. Trying to import the file again may trigger the File Already Imported pop-up on the screen. However, users can choose to bypass this check by clicking the Import button again.

How can you seamlessly integrate Docker Compose for integration testing into your CI pipeline without starting from scratch?

Run services for integration in the background using a docker-compose.yaml file. Connect to these services via their listening ports. Alternatively, while running docker-compose up in CI with an existing docker-compose.yaml is possible, it can complicate the workflow and limit pipeline control, including the ability to execute each step, gather feedback, and implement failure strategies.

What lead time do customers have before the CI starts running the newer version of images?

Customers typically have a one-month lead time before the CI starts running the newer versions of images. This allows them to conduct necessary tests and security scans on the images before deployment.

Can I export my entire FirstGen deployment history and audit trail from Harness?

You can use the following Harness FirstGen APIs to download your FirstGen audit trial and deployment history:

Why does a remote input set need a commit message input?

Harness requires a commit message so Harness can store the input set YAML in your Git Repo by making a commit to your Git repo.

What is the difference between "Remote Input Set" and "Import Input Set from Git"?

Remote Input Set is used when you create an input set and want to store it remotely in SCM.

Import Input Set from Git it is used when you already have an input set YAML in your Git repo that you want to import to Harness. This is a one-time import.

Why does the deleted service remain shown on the overview?

The dashboard is based on historical deployment data based on the selected time frame. Once the deleted service is not present in the selected time frame it will stop showing up on the dashboard.

In the overview page why Environments always showed 0 when the reality there are some environments?

The overview page offers a summary of deployments and overall health metrics for the project. Currently, the fields are empty as there are no deployments within the project. Once a deployment is in the project, these fields will be automatically updated.

What is the log limit of CI step log fetch step and how can one export the logs ?

Harness deployment logging has the following limitations:

- A hard limit of 25MB for an entire step's logs. Deployment logs beyond this limit are skipped and not available for download. The log limit for Harness CI steps is 5MB, and you can export full CI logs to an external cache.

- The Harness log service has a limit of 5000 lines. Logs rendered in the Harness UI are truncated from the top if the logs exceed the 5000 line limit.

Does Harness support any scripts available to migrate GCR triggers to GAR ?

No, one can create a script and use the api to re-create them. Please read more on this in our API Docs.

Please read more on this in the following Documentation on logs and limitations and Truncated execution logs.

In a Helm deployment with custom certificates, what is essential regarding DNS-compliant keys? ? How should delegates be restarted after modifying the secret for changes to take effect ?

Please follow below suggestions:

- Ensure that the secret containing custom certificates adheres strictly to DNS-compliant keys, avoiding underscores primarily. Following any modification to this secret, it is imperative to restart all delegates to seamlessly incorporate the changes.

- Helm Installation Command:

helm upgrade -i nikkelma-240126-del --namespace Harness-delegate-ng --create-namespace \

Harness-delegate/Harness-delegate-ng \

--set delegateName=nikkelma-240126-del \

--set accountId=_specify_account_Id_ \

--set delegateToken=your_Delegatetoken_= \

--set managerEndpoint=https://app.Harness.io/gratis \

--set delegateDockerImage=Harness/delegate:version_mentioned \

--set replicas=1 --set upgrader.enabled=true \

--set-literal destinationCaPath=_mentioned_path_to_destination \

--set delegateCustomCa.secretName=secret_bundle

- CA Bundle Secret Creation (Undesirable):

kubectl create secret generic -n Harness-delegate-ng ca-bundle --from-file=custom_certs.pem=./local_cert_bundle.pem

- CA Bundle Secret Creation (Desirable, no underscore in first half of from-file flag):

kubectl create secret generic -n Harness-delegate-ng ca-bundle --from-file=custom-certs.pem=./local_cert_bundle.pem

Please read more on Custom Certs in the following Documentation.

Can we use Continuous Verification inside CD module without any dependency of SRM ?

Yes, one can set up a Monitored Service in the Service Reliability Management module or in the Verify step in a CD stage.

Please read more on this in the following Documentation.

How do I create a Dashboard in NG, which shows all the CD pipelines which are executing currently, in real-time ?

You can use the "status" field in dashboards to get the status of the deployments.

How is infra key formed for deployments.

The Infrastructure key (the unique key used to restrict concurrent deployments) is now formed with the Harness account Id + org Id + project Id + service Id + environment Id + connector Id + infrastructure Id.

What if the infra key formed in case when account Id + org Id + project Id + service Id + environment Id are same and the deployments are getting queued because of it.

To make the deployment work you can :

- Add a connector in the select host field and specify the host.

- Change the secret identifier (create a new with same key but different identifier).

I have a terraform code which I will need to use it deploy resources for Fastly service. And, I would like to know should I create a pipeline in CI or CD module and what's the reasoning behind it?

The decision on whether to create your pipeline in the Continuous Deployment (CD) module or Continuous Integration (CI) module depends on your specific use case and deployment strategy.

If your goal is to automate the deployment of infrastructure whenever there are changes in your code, and you are using Terraform for provisioning, it is advisable to create a pipeline in the CD module. This ensures that your application's infrastructure stays current with any code modifications, providing seamless and automated deployment.

Alternatively, if your use of Terraform is focused on provisioning infrastructure for your CI/CD pipeline itself, it is recommended to establish a pipeline in the CI module. This allows you to automate the provisioning of your pipeline infrastructure, ensuring its availability and keeping it up-to-date.

In broad terms, the CI module is typically dedicated to building and testing code, while the CD module is designed for deploying code to production. However, the specific use case and deployment strategy will guide your decision on where to create your pipeline.

It's worth noting that you also have the option to incorporate both types of processes within a single pipeline, depending on your requirements and preferences.

Is there a way to get notified whenever a new pipeline is created?

No, As per the current design it's not possible.

Does harness support polling on folders?

We currently, do not support polling on folders. We have an open enhancement request to support this.

How do I filter out Approvals for Pipeline Execution Time in Dashboards?

You can get the Approval step duration from the Deployments and Services V2 data source.

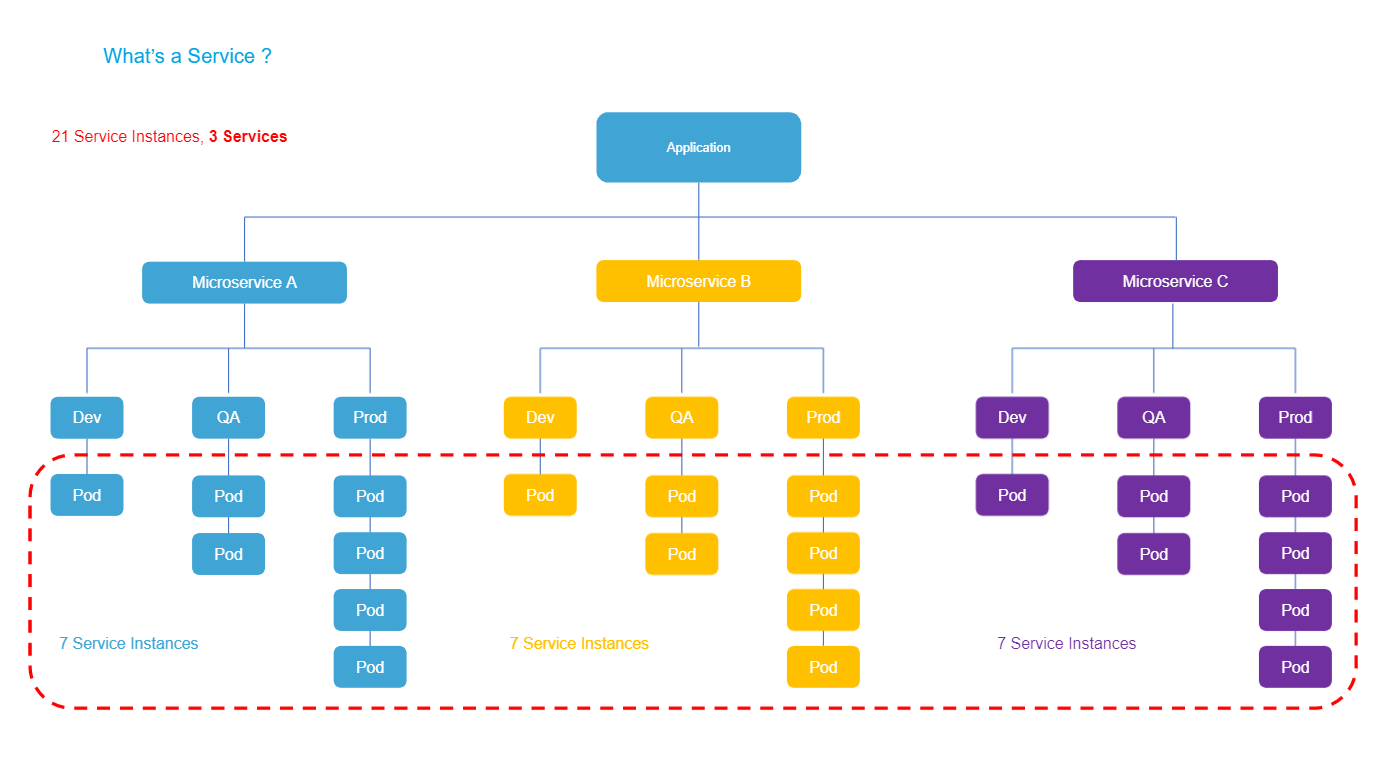

What is a service instance in Harness?

A service is an independent unit of software you deploy through Harness CD pipelines.

This will typically map to a service in Kubernetes apps, or to an artifact you deploy in traditional VM-based apps.

Service instances represent the dynamic instantiation of a service you deploy with Harness.

For example, for a service representing a Docker image, service instances are the number of pods running the Docker image.

Notes:

- For services with more than 20 service instances - active pods or VMs for that service - additional service licenses will be counted for each 20 service instances. This typically happens when you have large monolith services.

- See the Pricing FAQ at Harness pricing.

What are organizations and projects?

Harness organizations (orgs) allow you to group projects that share the same goal. For example, all projects for a business unit or division.

Within each org you can add several Harness projects.

A Harness project contains Harness pipelines, users, and resources that share the same goal. For example, a project could represent a business unit, division, or simply a development project for an app.

Think of projects as a common space for managing teams working on similar technologies. A space where the team can work independently and not need to bother account admins or even org admins when new entities like connectors, delegates, or secrets are needed.

Much like account-level roles, project members can be assigned project admin, member, and viewer roles

What is a Harness pipeline?

Typically, a pipeline is an end-to-end process that delivers a new version of your software. But a pipeline can be much more: a pipeline can be a cyclical process that includes integration, delivery, operations, testing, deployment, real-time changes, and monitoring.

For example, a pipeline can use the CI module to build, test, and push code, and then a CD module to deploy the artifact to your production infrastructure.

What's a Harness stage?

A stage is a subset of a pipeline that contains the logic to perform one major segment of the pipeline process. Stages are based on the different milestones of your pipeline, such as building, approving, and delivering.

Some stages, like a deploy stage, use strategies that automatically add the necessary steps.

What are services in Harness?

A service represents your microservices and other workloads logically.

A service is a logical entity to be deployed, monitored, or changed independently.

What are service definitions?

When a service is added to the stage in a pipeline, you define its service definition. Service definitions represent the real artifacts, manifests, and variables of a service. They are the actual files and variable values.

You can also propagate and override a service in subsequent stages by selecting its name in that stage's service settings.

What artifacts does Harness support?

Harness supports all of the common repos.

See Connect to an artifact repo.

What's a Harness environment?

Environments represent your deployment targets logically (QA, production, and so on). You can add the same environment to as many stages as you need.

What are Harness infrastructure definitions?

Infrastructure definitions represent an environment's infrastructure physically. They are the actual clusters, hosts, and so on.

What are Harness connectors?

Connectors contain the information necessary to integrate and work with third-party tools.

Harness uses connectors at pipeline runtime to authenticate and perform operations with a third-party tool.

For example, a GitHub connector authenticates with a GitHub account and repo and fetches files as part of a build or deploy stage in a pipeline.

See Harness Connectors how-tos.

How does Harness manage secrets?

Harness includes built-in secrets management to store your encrypted secrets, such as access keys, and use them in your Harness account. Harness integrates will all popular secrets managers.

See Harness secrets management overview.

Can I reference settings using expressions?

Yes. Everything in Harness can be referenced by a fully qualified name (FQN). The FQN is the path to a setting in the YAML definition of your pipeline.

See Built-in Harness variables reference.

Can I enter values at runtime?

Yes. You can use runtime Inputs to set placeholders for values that will be provided when you start a pipeline execution.

See Fixed values, runtime inputs, and expressions.

Can I evaluate values at run time?

Yes. With expressions, you can use Harness input, output, and execution variables in a setting.

All of these variables represent settings and values in the pipeline before and during execution.

At run time, Harness will replace the variable with the runtime value.

See Fixed Values, runtime inputs, and expressions.

Error evaluating certain expressions in a Harness pipeline

Some customers have raised concerns about errors while trying to evaluable expressions (example: <+pipeline.sequenceId>) while similar expressions do get evaluated. In this case the concatenation in the expression /tmp/spe/<+pipeline.sequenceId> is not working because a part of expression <+pipeline.sequenceId> is integer so the concatenation with /tmp/spec/ is throwing error because for concat, both the values should be string only.

So we can invoke the toString() on the integer value then our expression should work. So the final expression would be /tmp/spe/<+pipeline.sequenceId.toString()>.

How to carry forward the output variable when looping steps?

If you are using looping strategies on steps or step groups in a pipeline, and need to carry forward the output variables to consecutive steps or with in the loop, you can use <+strategy.iteration> to denote the iteration count.

For example, assume a looping strategy is applied to a step with the identifier my_build_step. which has an output variable my_variable The expression <+pipeline.stages.my_build_step.output.outputVariables.my_variable> won't work. Instead, you must append the index value to the identifier in the expression, such as: <+pipeline.stages.my_build_step_0.output.outputVariables.my_variable>

If you are using with in the loop you can denote the same as <+pipeline.stages.my_build_step_<+strategy.iteration>.output.outputVariables.my_variable>

See Iteration Counts

How do I get the output variables from pipeline execution using Harness NG API?

We have an api to get the pipeline summary:

https://apidocs.harness.io/tag/Pipeline-Execution-Details#operation/getExecutionDetailV2

If you pass the flag renderFullBottomGraph as true to this api it also gives you the output variables in the execution. You can parse the response to get the output variables and use it accordingly.

We have multiple accounts, like sandbox and prod, and we want to move the developments from sandbox to prod easily. Is there a solution for this?

Absolutely! We recommend customers to use test orgs or projects for sandbox development. Our hierarchical separation allows them to isolate test cases from production workloads effectively.

For pipeline development concerns, we have a solution too. Customers can utilize our built-in branching support from GitX. You can create a separate branch for building and testing pipeline changes. Once the changes are tested and verified, you can merge the changes into their default branch.

Sandbox accounts are most valuable for testing external automation running against Harness, which helps in building or modifying objects. This way, you can test changes without affecting production environments.

Is there an environment variable to set when starting the container to force the Docker delegate to use client tool libs from harness-qa-public QA repo?

To achieve this, you need to create a test image that points to the harness-qa-public QA repository. This involves updating the Docker file with the appropriate path to the QA buckets.

If I delete an infrastructure definition after deployments are done to it, what are the implications other than potential dashboard data loss for those deployments ?

At the moment there is no dependency on the instance sync and infrastructure definition. Infrastructure definition is used only to generate infrastructure details. The instance sync is done for service and environment. Only in case if any these are deleted, the instance sync will stop and delete instances.

If you are using the default release name format in Harness FirstGen as release-${infra.kubernetes.infraId}, it's important to note that when migrating to Harness NextGen, you will need to replace ${infra.kubernetes.infraId} with the new expression. In Harness NextGen, a similar expression <+INFRA_KEY> is available for defining release names. However, it's crucial to understand that these expressions will resolve to completely different values compared to the expressions used in Harness FirstGen.

What is the procedure to take services backup?

We do not have any backup ability for services out of the box but you can take the backup of service YAMLs and use them later for creating service if there is any issue with the service.

What is Harness FirstGen Graphql API to create Harness pipelines in a specific application?

We do not have a way to create a new pipeline using Graphql in FirstGen. However, we do have APIs to create Harness pipelines in NextGen.

How can specific users be able to approve the deployment?��

You can create a user group of specific users and specify the same user group in the Approval stage so only those users can able to approve the execution.

For reference: Select Approvers.

Error when release name is too long.

In the deployment logs in Harness, you may get an error similar to this:

6m11s Warning FailedCreate statefulset/release-xxx-xxx create Pod release-xxx-xxx-0 in StatefulSet release-xxx-xxx failed error: Pod "release-xxx-xxx-0" is invalid: metadata.labels: Invalid value: "release-xxx-xxx-xxx": must be no more than 63 characters

This is an error coming from the kubernetes cluster stating that the release name is too long. This can be adjusted in the Environments section.

- Select the environment in question.

- Select infrastructure definitions, and select the name of the infrastructure definition.

- Scroll down and expand Advanced, and then modify the release name to be something shorter.

Where should I add label release: {{ .Release.Name }}?

For any manifest object which creates the pod, you have to add this label in its spec. Adding it in Service, Deployment, StatefulSet, and DaemonSet should be enough.

What does the release name mean in infrastructure?

The release name is used to create a harness release history object, which contains some metadata about the workloads. This helps us perform the steady state check.

Is it possible to apply notification rule on environment level for workflow failure/success?

Workflow notification strategy can only interpret Condition,Scope, and User Group fields. So, all the notification rules are applied on workflow level.

Is it possible to apply notification rule on environment level for workflow failure/success?

Workflow notification strategy can only interpret Condition,Scope, and User Group fields. So, all the notification rules are applied on workflow level.

How do you determine the number of service instances/licenses for our services?

We calculate service licenses based on the active service instances deployed in the last 30 days. This includes services from both successful and failed deployments. This includes if the step involving a service was skipped during a pipeline execution.

What is considered an active service instance for license calculation?

An active service instance is determined by finding the 95th percentile of the number of service instances of a particular service over a period of 30 days.

How are licenses consumed based on the number of service instances?

Each service license is equivalent to 20 active service instances. The number of consumed licenses is calculated based on this ratio.

Is there a minimum number of service instances that still consume licenses?

Yes, even if a service has 0 active instances, it still consumes 1 service license.

Are the licenses calculated differently for different types of services, such as CG and NG?

No, the calculation method remains the same for both CG (Current Generation) and NG (Next Generation) services.

Can you provide an example of how service licenses are calculated based on service instances?

Sure! An example of the calculation can be found in the following Documentation. This example illustrates how the number of service instances corresponds to the consumed service licenses.

Is on-demand token generation valid for both Vault's Kubernetes auth type and app role-based auth?

No, on-demand token generation is only valid for app role-based auth.

Can we use matrices to deploy multiple services to multiple environments when many values in services and environments are not hardcoded?

Yes, you can use matrices for deploying multiple services to multiple environments even if many values in services and environments are not hardcoded.

How to test Harness entities (service, infra, environment) changes through automation?

Harness by default will not let the user push something or create any entity which is not supported or is incorrect as our YAML validator always makes sure the entity is corrected in the right format.

You can use YAML lint to verify the YAML format of the entity. There is no way to perform testing (automation testing, unit testing, etc.) of Harness entities before releasing any change within those entities.

What happens when the limit of stored releases is reached?

When the limit of stored releases is reached, older releases are automatically cleaned up. This is done to remove irrelevant data for rollback purposes and to manage storage efficiently.

Can we use variables in the vault path to update the location dynamically based on environment?

A expression can be used in the URL, for example - Setting up a PATH variable in the pipeline and calling that variable in the get secret - echo "text secret is: " <+secrets.getValue(<+pipeline.variables.test>)>

How do I use OPA policy to enforce environment type for each deployment stage in a pipeline i.e. prod or pre-prod?

The infra details are passed as stage specs.

For example, to access the environment type, the path would be - input.pipeline.stages[0].stage.spec.infrastructure.environment.type

You will have to loop across all the stages to check its infra spec.

Do we support services and environments at the org level ?

Yes, we do. For more please refer this in following Documentation.

How can Harness detect if the sub-tickets in Jira are closed before the approval process runs?

The first step is to make API calls to the Jira issue endpoint. By inspecting the response from the API call, you can check if the 'subtask' field is populated for the main issue. Once you identify the subtask issue keys from the API response, you can create a loop to retrieve the status of each sub-ticket using their respective issue keys. This will allow you to determine if the sub-tickets are closed or not before proceeding with the approval process in Harness.

What am I not able to delete the template having an “Ad” string in between with adblocker installed?

It will happen due to an ad blocker extension installed on the user system. It will happen only for the template with 'Ad' in the name. For example, Sysdig AdHoc containing an “Ad” string. When this is sent in the API as a path or a query param, this will get blocked by the ad blocker.

These ad blockers have some rules for the URIs: if it contains strings like “advert”, “ad”, “double-click”, “click”, or something similar, they block it.

What are some examples of values that are not hardcoded in the deployment setup?

Some examples of values that are not hardcoded include chart versions, values YAMLs, infradef, and namespaces. These are currently treated as runtime inputs.

When querying the Harness Approval API, the approval details are returning with the message, No approval found for execution.

The API will only return Approval details if there are any approval step pending for approval. If there are no such executions currently, then its expected to return No Approval found for execution

Trigger another stage with inputs in a given pipeline?

You cannot do it if the stage is part of the same pipeline. However, using pipeline A and running a custom trigger script inside it can trigger the CI stage which is part of pipeline B.

Can I manipulate and evaluate variable expressions, such as with JEXL, conditionals or ternary operators?

Yes, there are many ways you can manipulate and evaluate expressions. For more information, go to Use Harness expressions.

Can I use ternary operators with triggers?

Yes, you can use ternary operators with triggers.

Can I customize the looping conditions and behavior?

Yes, Harness NextGen often offers customization options to define the loop exit conditions, maximum iteration counts, sleep intervals between iterations, and more information here.

At the organizational level, I aim to establish a user group to which I can assign a resource group containing numerous distinct pipelines across specific projects.

We don’t support specific pipeline selections for specific projects for an organization. But the user can limit the access to the projects by selecting specific projects as scopes to apply in the org level resource group.

How do I created a OPA policy to enforce environment type?

The infra details are passed as stage specs.

For example, to access the environment type, the path would be - input.pipeline.stages[0].stage.spec.infrastructure.environment.type

You will have to loop across all the stages to check its infra spec.

How to use spilt function on variable?

You can split on any delimiter and use index based access.

For example, if you have a variable with prod-environment-variable, you can use the below expression to get prod:

<+<+pipeline.variables.envVar>.split('-')[0]>

How to use the substring function on variable?

You can use the substring function when you need to pass the starting and end index.

For example, if you have a variable with prod-environment-variable, you can use the below expression to get prod:

<+<+pipeline.variables.envVar>.substring(0,3)>

How to pass value to a variable manually while running from UI if same pipeline is configured to run via trigger and using variable from trigger.

You can check the triggerType variable to identify if pipeline was invoked via trigger or manually and can use the following JEXL condition:

<+<+pipeline.triggerType>=="MANUAL"?<+pipeline.variables.targetBranch>:<+trigger.targetBranch>>

How to concatenate secrets with string?

You use either of the following expressions:

<+secrets.getValue("test_secret_" + <+pipeline.variables.envVar>)><+secrets.getValue("test_secret_".concat(<+pipeline.variables.envVar>))>

Can a non-git-sync'd pipeline consume a git-sync'd template from a non-default branch?

Yes, an inline pipeline can consume a template from non-default branch.

Reference specific versions of a template on a different branch from the pipeline.

While using Harness Git Experience for pipelines and templates, you can now link templates from specific branches.

Previously, templates were picked either from the same branch as the pipeline if both pipelines and templates were present in the same repository, or from the default branch of the repository if templates were stored in a different repository than the pipeline.

The default logic will continue to be used if no branch is specified when selecting the template. But, if a specific branch is picked while selecting the template, then templates are always picked from the specified branch only.

Is there a way to generate a dynamic file with some information in one stage of the pipeline and consume that file content in a different pipeline stage?

For CI:

Go to this Documentation for more details.

For CD:

You can use API to create a file in Harness file store and then refer it to other stage. For more details, go to API documentation.

Or, you can just write a file on the delegate and use the same delegate.

How to test Harness entities (service, infra, environment) changes through automation?

Harness by default will not let the user push something or create any entity which is not supported or is incorrect as our YAML validator always makes sure the entity is corrected in the right format.

You can use YAML lint to verify the YAML format of the entity. There is no way to perform testing (automation testing, unit testing, etc.) of Harness entities before releasing any change within those entities.

What kind of order do we apply to the Docker Tags as part of the artifact we show for the users?

Except for the latest version of Nexus, it is in alphabetical order.

Is there a way to use a pipeline within a pipeline in a template?

We do not support this, nor do we plan to at this time due to the complexity already with step, stage, and pipeline templates being nested within each other.

Resolving inputs across those levels is very expensive and difficult to manage for end users.

In Harness can we refer to a secret created in org in the account level connector?

No, higher-level entity can refer to lower-scoped entities. For example, we cannot refer to a secret created in org in the account level connector.

Do we have multi-select for inputs in NG as we had in FG?

Multiple selection is allowed for runtime inputs defined for pipelines, stages, and Shell Script variables. You must specify the allowed values in the input as mentioned in the above examples.

The multiple selection functionality is currently behind the feature flag, PIE_MULTISELECT_AND_COMMA_IN_ALLOWED_VALUES. Contact Harness Support to enable the feature.

How to view Deployment history (Artifact SHA) for a single service on an environment?

You can go to Service under the project --> Summary will show you the details with what artifact version and environment.

Question about deployToAll yaml field, The pipeline yaml for the environment contains deployToAll field. What does that field do?

The field is used when you use the deploy to multiple infrastructures option. This field is for deploy to all infra inside an environment. Documentation.

Can we use variables in the vault path to update the location dynamically based on environment?

A expression can be used in the URL, for example - Setting up a PATH variable in the pipeline and calling that variable in the get secret - echo "text secret is: " <+secrets.getValue(<+pipeline.variables.test>)>

Can we add a delay of n minutes before a pipeline is invoked via trigger?

We don't have any timer for the trigger. It will trigger the pipeline whenever a change is made in the master branch. Since this is a webhook.

As a workaround, a shell script can be added to sleep for 10 mins or n mins as per requirements

How can I manually launch a pipeline which has conditional execution based on trigger data?

Pipeline will run into an error because trigger basesd expression will be null.

We can add a workaround, instead of adding the condition such as - <+trigger.event> == "PR", set it to a variable, pass the variable value at runtime, and set the default value as <+trigger.event> == "PR", so when the pipeline is executed with a trigger default value is passed and it while executing it manually, you can set it as false to skip the condition of this execution.

what are PerpetualTask?

PerpetualTasks" refers to any task that is running on the delegate continuously and lasting indefinitely. All the tasks have task id, ex - rCp6RpjYTK-Q4WKqcxalsA associated with it, we can filter the delegate logs based on the task ID and we can check what step is continuously failing at the delegate, it could be reading secrets from the vault or taking a lock over some resource.

Is it possible to disable First Generation?

Yes, You should see the toggle "Allow Harness First generation Access" setting in NG Account Overview UI. Use this to enable and disable the first gen access

How do I use OPA policy to enforce environment type for each deployment stage in a pipeline i.e. prod or preprod?

The infra details are passed as stage specs.

For example, to access the environment type, the path would be - input.pipeline.stages[0].stage.spec.infrastructure.environment.type

You will have to loop across all the stages to check its infra spec.

What is the purpose of overriding Service Variables in the Environment configured in the Stage Harness?

Overriding Service Variables allows you to modify or specify specific values for your services in a particular environment or stage, ensuring that each deployment uses the appropriate configurations.

How to get Bearer token to make Web API calls?

You can get the bear token from the "acl" network request. Open the network tab and search for acl and check the request headers. You will find the bearer token under Authorization.

In pipeline template variable location is there any option to move or place the variables according to our requirements?

You can modify the YAML file to change the variable order. Currently, moving the variable order is not supported in UI.

The delegates set PROXY_HOST and PROXY_PORT, which is different from HTTP_PROXY in CI step?

Yes, we use the PROXY_HOST and PROXY_PORT variable values to build the HTTP_PROXY (or HTTPS_PROX)Y environment variable and inject it.

Can I add CI/CD steps to customer stage?

Native CI and CD steps are not supported for custom stage, These steps cannot be added via UI. Adding them manually will result in an error while running the pipeline - "Stage details sweeping output cannot be empty".

What kind of payload type is supported for policy step?

Policy step is only supported against a JSON payload.

How to achieve Parallel Execution of Deploy one service to multiple Infrastructures?

You can add maxConcurrency: X in the repeat strategy, which is the number of concurrent instances running at a time. eg - if maxConcurrency: 5, it will run 5 concurrent/parallel step/stage.

Do we support expression for Harness Variable?

We do not support expression for Harness variables currently created at project account or org level. Only fixed values are currently supported.

How to properly pass tag inputs in API call for Harness Filestore?

For Harness file store tags are key value pairs and hence they need to be specified in the similar way , below is an example of how this needs to be specified:

tags=[{"key":"tag","value":"value"}]

How do I override Service Variables in a Harness Environment within a Stage?

You can override Service Variables in Harness by navigating to the specific Environment within a Stage configuration and then editing the Environment's settings. You can specify new values for the Service Variables in the Environment settings.

Can I override Service Variables for only certain services within an Environment?

You can selectively override Service Variables for specific services within an Environment.

What happens if I don't override Service Variables for a specific Environment in a Stage?

If you don't override Service Variables for a particular Environment in a Stage, the values defined at the Service level will be used as the default configuration. This can be useful for consistent settings across multiple Environments.

Can I revert or undo the overrides for Service Variables in an Environment?

You can revert or undo the overrides for Service Variables in an Environment anytime you can revert variables to their default values.

What are some common use cases for overriding Service Variables in an Environment?

- Environment-specific configurations: Tailoring database connection strings, API endpoints, or resource sizes for different environments (e.g., dev, staging, production).

- Scaling: Adjusting resource allocation and load balancer settings for different deployment environments.

Where can I find more information and documentation on overriding Service Variables in Harness?

You can find detailed documentation and resources on how to override Service Variables in Harness here: Documentation

Can I use Harness to manage environment-specific configurations for my Cloud Functions?

Yes, Harness supports environment-specific configurations for your functions. You can use Harness secrets management to store sensitive information, such as API keys or database credentials, and inject them into your Cloud Functions during deployment.

Can I control sequence of serial and parallel in Multi Services/Environments ?

No, we cannot control the sequence for Multi Services/Environment deployments. Please refer more on this in the following Documentation

Is it possible to add variables at the Infrastructure Definition level?

As of now, Harness does not provide direct support for variables within infrastructure definitions. However, you can achieve a similar outcome by using tags in the form of key:value. For example, you can define a tag like region:us-east and reference it using the following expression: <+infra.tags.region>.

How do I propagate an environment's namespace to another stage?

By using the following expression on the target stage, you will be able to propagate the namespace. Expression: <+pipeline.stages.STAGE_IDENTIFIER.spec.infrastructure.output.namespace>

How do I dynamically load values.yaml per environment?

Many of Harness's fields allow you to switch from a static field to an expression field. In your Helm chart/kubernetes manifests declaration, you can switch the values field to an expression field and use an expression like <+env.name>-values.yaml. Then, in your repository, create a value per environment.

When making a change to a template, do we have to manually go through all the places that template is referenced and run “reconcile”?

Yes, it is expected design behaviour. For more details, go to Documentation.

How can a customer do migrating of Service Override for Environments for large configurations?

- Terraform or APIs Used for Initial Configuration: If the customer initially created the Harness configuration using Terraform, they can easily change the organization identifier by modifying the configuration file. Likewise, if APIs were used for the initial configuration, the same approach applies to change the organization identifier.

- Creation from UI: If the customer originally created the configuration through the user interface (UI), a different process is required. In such cases, the customer can follow these steps:

- Utilize GET APIs to retrieve the existing configuration.

- Create a new configuration for the new organization using the create APIs.

- This allows for the necessary overrides and adaptations as needed for the new organization's requirements.

Please refer more on this in the following documentation: Get Service Overrides and Create Service Overrides.

Is there a specific rationale behind the restriction on using expressions when defining the deployment group for multi-environment deployments ?

Yes, this is indeed a limitation at present. When we initially introduced this feature, it was designed with fixed and runtime input support. Additionally, it's worth noting that we do not currently support passing a list for the service or environment field via an expression.

Is it possible to configure a Step Group to run on only a subset of the VMs in the infrastructure?

No, it is not possible to configure a Step Group to run on only a subset of the VMs in the infrastructure. The VMs are grouped at the Environment/Infrastructure level and cannot be further restricted at the Step Group level.

You would need to apply the restriction at the Step level for each step that needs to run on a subset of the VMs.

Why the "Always Execute this Step” condition does not always run in the CD pipeline?

Always execute step runs regardless of success or failure. But, to trigger this condition on failure, the previous step should be considered as failure. If the error is rolled back, then it is not considered a failure. Hence, the next step's conditional execution is not executed. Therefore, a failure strategy such as “Mark as Failure” or "Ignore Failure" is required.

Do we support services and envs at the org level?

Yes, we do. For more please refer this in following Documentation.

Can Expressions operate within Harness Variables for configurations at the account level in the Next-Gen version?

No, higher level entity cannot refer to lower scoped entities. Please refer more on this in following Documentation.

Can we use a pipeline within a pipeline in a template?

No, This is a limitation with templates. We do not support creating pipelines stage templates.

Does an expression retrieve from which branch the pipeline loaded the YAML?

No, there is no such expression which will always show from which branch the pipeline yaml was loaded.

Can we run two input sets of a pipeline together in parallel?

No, it needs to be a different execution every time.

Can we select a delegate and see what steps have ran on it without going into each pipeline execution?

No, we don’t have this capability.

In Harness FirstGen, how can I remove the old plan-file and start again with a fresh plan to make the workflow run successfully?

You can enable the Skip Terraform Refresh when inheriting Terraform plan option.

For variables do we have options to intake parameters via dropdown or radio buttons etc ?

Yes we do, here in the following Documentation , with allowed values you can have multiple inputs to select from range of values allowed.

In fetch pipeline summary API, what does the fields "numOfErrors" and "deployments" mean?

Deployments field has list of number of total executions per day for last 7 days and numOfErrors field has list of number of failed executions per day for last 7 days.

Is there a way I can update the git repo where the pipeline YAML resides?

Yes you can use this API here to update the Git repo of the pipeline.

Is it possible to reference a connectors variable in a pipeline?

We do not support referencing variables/values from the connector into the pipeline.

How do I resolve No eligible delegate(s) in account to execute task. Delegate(s) not supported for task type {TERRAFORMTASKNGV6} error?

Upgrading the delegate to latest version should resolve this issue.

What is MonitoredService?

Monitored service are used for service reliability management. You can find more details on this in following Documentation.

How to convert a variable to Lowercase?

You can use .toLowerCase() for example <+<+stage.variables.ENVIRONMENT>.toLowerCase()> and retry the pipeline.

Can I encrypt the Token/Secret passed in the INIT_SCRIPT?

It cannot be encrypted directly but this can be achieved by creating the k8s secret for the credentials and referring them in the init script.

example -

aws_access_key=kubectl get secrets/pl-credentials --template={{.data.aws_access_key}} | base64 -d

aws_secret_key= kubectl get secrets/pl-credentials --template={{.data.aws_secret_key}} | base64 -d

Another approach would be saving the value in Harness's secret manager/any other secret manager and referencing it in the script. Check for more info in Documentation.

How do I submit a feature request for Harness Platform?

In the documentation portal, scroll down to the bottom of the page, select Resources > Feature Requests. It will lead you to the internal portal: https://ideas.harness.io/ where you can submit a feature request.

Do we need to install jq library in delegate machine or does Harness provide jq by default?

By default, Harness does not provide jq on delegate host. You need to add the below command in your INIT_SCRIPT for this:

microdnf install jq

Explain what freeze window means?

Freeze Window can be setup in Harness with certain rules. No deployments can be run during this window. A freeze window is defined using one or more rules and a schedule. The rules define the Harness orgs, projects, services, and environments to freeze. Deployment freeze does not apply to Harness GitOps PR pipelines. You cannot edit enabled deployment freeze windows. If the deployment freeze window you want to change is enabled, you must first disable it, make your changes, then enable it again.

What Roles are required to edit Pipeline Triggers and Input Sets?

The roles required to edit Pipeline Triggers and Input sets are View and Create / Edit.

If we have multiple services using this same pipeline template, both within and outside the same project, does Harness differentiate each pipeline execution by service? If both service1 and service2 in the same project are using this same pipeline and are sitting at the approval step, would approving the service1 pipeline cause the service2 pipeline to be rejected?

The pipelines will run just fine, as you used the template and specified different services at the runtime , so it will run independently.

Service showing as active but hasn't been part of a deployment in over 30 days.

Harness shows the Active instances is say you had a deployment and the VM got deployed from a Harness deployment. No matter if we deploy anything else on the VM , until the VM is up and running as it is linked with the service. It will show as active instance. The 30 days mentioned here , is for service based licence calculation and usage for CD.

Why can't I access dashboards? It says "Requires Upgrade to Enterprise Plan to set up Dashboards"?

Dashboards requires an Enterprise license for all modules except for the CCM module.

Are there variables for account and company name?

Yes, <+account.name> and <+account.companyName> respectively.

Do we need to escape '{' in manifest for go templating?

The curly brackets are special characters for go and hence we need to escape it. If we do not escape in the manifest the templating will fail to render.

Can we use multiple condition check in conditional execution for stages and steps?

We support having multiple condition check in the conditional execution. If you need to execute the stage based on two condition being true you can make use of AND operator, a sample is below:

<+pipeline.variables.var1>=="value1" & <+pipeline.variables.var2>=="value2"

Can we persist variables in the pipeline after the pipeline run is completed?

We do not persist the variables and the variables are only accessible during the context of execution. You can make api call to write it as harness config file and later access the Harness file or alternatively you have a config file in git where you can push the var using a shell script and later access the same config file.

Can we access harness variable of one pipeline from another pipeline?

One pipeline cannot access the variables of other pipelines. Only values of variable created at project, account and org level can be accessed by pipelines. These values for these type of variables are fixed and cannot be changed by pipelines directly. These variable values can be updated via the UI or API.

How can I turn off FG (First Generation) responses or remove the switch to CG option?

To disable FG responses:

- Go to your account settings.

- Locate the "Allow First Gen Access" option.

- Turn off the "Allow First Gen Access" setting.

- Once disabled, the "Launch First Gen" button will no longer be visible, and you will no longer receive FG responses.

Under what condition does an immutable delegate automatically upgrade?

Auto-upgrade initiates when a new version of the delegate is published, not when the delegate is expired.

Getting an error while evaluating expression/ Expression evaluation fails.

The concatenation in the expression /tmp/spe/<+pipeline.sequenceId> is not working because a part of expression <+pipeline.sequenceId> is integer so the concatenation with /tmp/spec/ is throwing error because for concat, both the values should be string only.

So we can invoke the toString() on the integer value then our expression should work. So the final expression would be /tmp/spe/\<+pipeline.sequenceId.toString()>.

Is it possible to include FirstGen measures and dimensions in custom dashboards using NextGen dashboards?

Yes, NG dashboards support Custom Group (CG) data, and you can create custom dashboards with FirstGen measures and dimensions using the Create Dashboard option.

Can I use the Service Propagation Feature to deploy dev and prod pipelines without changing critical parameters?

Yes, the Service Propagation allows you to provide fixed critical parameters. Please refer more on this in the following Documentation 1 and Documentation 2.

Do we need to manually filter the API response to check if the pipeline was executed by a trigger in NG ?

Yes,Harness NG uses REST APIs not graphql, this means that we need to review the api calls they are making and provide them the api endpoints that are parity.

What steps are involved in obtaining output from a chained pipeline for use in a different stage?

To get output from a chained pipeline and utilize it in another stage, you need to specify the expression of the output variable for the chained pipeline at the parent pipeline level in the output section.

Do we have the export manifests option in NG like we have in CG?

No, we have a dry-run step, that will export manifest for customer to use in other steps, but there is no option to inherit manifest.Please refer more on this in the following Documentation

What YAML parser is being used for harness YAML, Pipelines, or Templates?

We have a YAML schema available on GitHub that you can pull into your IDE for validation. It is available on Github Repository and one can look at Jackson as well.

It has usages as following:

- The schema makes it easy for users to write pipeline and Template YAMLs in their favourite IDE such as IntelliJ/VS. The schema can be imported into the IDE, then used to validate the YAMLs as they are being written and edited.

- The same schema is used internally by Harness to validate YAMLs; so the validation is now standardised.

Can there be a way to select a delegate and see what steps have ran on it without going into each pipeline execution?

No, we don't have this capability.

Do we have an expression to retrieve from which branch the pipeline loaded the YAML?

No, we don't have such an expression which will always show from which branch the pipeline YAML was loaded.

Is there to check the pipeline was ever run in last two years?

As per the current design, the execution history is available up to the past 6 months only.

Is it possible to have drop-down options for multiple input?

You can make the variable as Input and define multiple allowed values by selecting the Allowed Values checkbox.

How to fail a pipeline or step if some condition is not passed In Bash script?

You can set in script set -e to exit immediately when a command fails, or you can set exit code to non-zero if certain conditions match and that should fail the step.

Is there an easy way to see all the recent deployments of a workflow that had run?

You can use deployment filter and select the workflow and time range and you will able to see all the deployment for that workflow within that time range

How do I access one pipeline variables from another pipeline ?

Directly, it may not be possible.

As a workaround, A project or org or account level variable can be created and A shell script can be added in the P1 pipeline after the deployment which can update this variable with the deployment stage status i.e success or failure then the P2 pipeline can access this variable and perform the task based on its value.

The shell script will use this API to update the value of the variable - https://apidocs.harness.io/tag/Variables#operation/updateVariable

What happens when the CPU usage of a delegate exceeds a certain threshold when the DELEGATE_CPU_THRESHOLD env variable is configured?

When CPU usage exceeds a specified threshold with the DELEGATE_CPU_THRESHOLD env variable configured, the delegate rejects the tasks and will not attempt to acquire any new tasks. Instead, it waits until the resource usage decreases.

Will the Delegate crash or shut down if it rejects tasks due to resource usage exceeding the threshold?

No, the Delegate will not crash or shut down when it rejects tasks due to high resource usage. It will remain operational but will not attempt to acquire any new tasks until resource levels decrease.

How does the Delegate handle task acquisition when it's busy due to resource constraints?

Think of the Delegate's behavior as a queue. If the Delegate is busy and cannot acquire tasks due to resource constraints, other eligible Delegates will be given the opportunity to acquire those tasks.

What happens if there are no other eligible Delegates available to acquire tasks when the current Delegate is busy?

If there are no other eligible Delegates available to acquire tasks when the current Delegate is busy, the pipeline will remain in a running state, waiting for a Delegate to become less busy. However, if no Delegate becomes less busy during a specified timeout period, the pipeline may fail.

Is it possible to specify a custom threshold for rejecting tasks based on resource usage?

Yes, you can choose to specify a custom threshold for rejecting tasks based on CPU and memory usage. This threshold is controlled by the DELEGATE_RESOURCE_THRESHOLD configuration. If you don't specify a threshold, the default value of 70% will be used.

How can I pass a value from one pipeline to another in a chained pipeline setup?

You can pass a value from one pipeline to another by using output variables from the first pipeline and setting them as input variables in the second pipeline.

How do I access the value of an output variable from one child pipeline in another child pipeline within a chained pipeline?